Electromagnetic articulography (EMA)

EMA is an internationally standardised method for collecting articulatory data: it records the movements of a speaker's articulators (e.g. tongue, upper and lower lip, jaw) directly during speech.

Electromagnetic articulography is based on the physical principle of electromagnetic induction. Transmitter coils generate an inhomogeneous magnetic field in which sensors (receiver coils) attached to the articulators can be localised, so that their positions and velocities can be tracked over time.
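Sensor velocities can be derived from the sampled positions by numerical differentiation. The sketch below is illustrative only; it assumes positions are available as an `(n_samples, 3)` NumPy array in millimetres, and the function name `tangential_velocity` is hypothetical. The sampling rate `fs` is a parameter you must set to the rate of your own recording.

```python
import numpy as np


def tangential_velocity(positions: np.ndarray, fs: float) -> np.ndarray:
    """Return the speed of one sensor (mm/s) at every sample.

    positions: array of shape (n_samples, 3) with x/y/z coordinates in mm (assumed units).
    fs: sampling rate in Hz (assumed; use the rate of your recording).
    """
    # Central differences along the time axis give the x, y and z velocity components.
    velocity = np.gradient(positions, 1.0 / fs, axis=0)
    # Tangential velocity (speed) is the Euclidean norm of the 3-D velocity vector.
    return np.linalg.norm(velocity, axis=1)
```

For example, a sensor moving 1 mm per sample along one axis at 250 Hz has a speed of 250 mm/s at every sample. In practice, positions are usually low-pass filtered before differentiation, because differentiation amplifies measurement noise.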

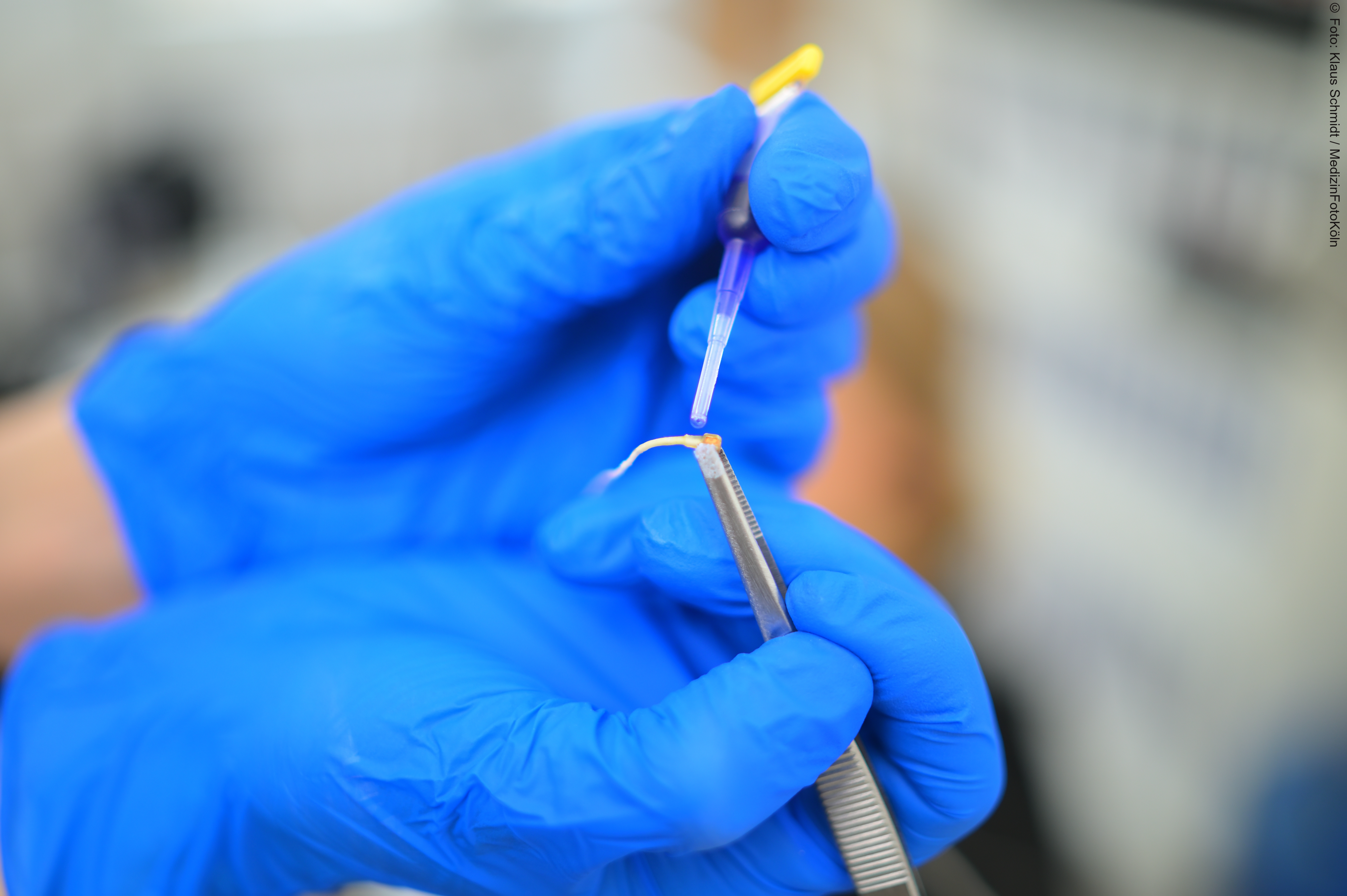

Sensors measuring approx. 3 x 3 x 2 mm are attached to the lower lip, upper lip, tongue tip, tongue back and chin (to track the jaw) using medical tissue adhesive. This is standard procedure for EMA measurements. Three additional reference sensors, placed on the root of the nose and behind the left and right ears, record the movements of the head. These head movements can then be computed out of the articulator data, so speakers can move their heads naturally during the recording.

The movement of each coil can be displayed along each of the three spatial dimensions (e.g. forward/backward, up/down and left/right movement of the tongue back) or as a three-dimensional signal. In addition, the tilt angles of the coils provide two further dimensions for analysis, which can be of interest when analysing laterals, for example.

Alongside the temporal and spatial tracking of articulatory movements, a time-synchronised acoustic signal of the speech is recorded. EMA thus captures the coordination of speech movements with high temporal and spatial resolution. This is particularly important for tongue movements, which are not visible from the outside and therefore cannot be captured with simple imaging techniques such as video recordings.
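The idea behind the reference sensors can be sketched as follows. A head-based coordinate frame is built from the nose and ear sensors at each sample, and each articulator position is expressed in that frame. Because the frame moves with the head, rigid head movements cancel out. This is a minimal illustration under assumed conventions. The origin at the midpoint between the ears, the axis directions and the function names are all hypothetical, and the sketch is not the correction procedure of any particular EMA software.

```python
import numpy as np


def head_frame(nose, left_ear, right_ear):
    """Build a head-based frame from the three reference sensors (axis conventions assumed).

    Returns (origin, rotation); the rows of `rotation` are the frame's unit axes
    expressed in device coordinates.
    """
    nose, left_ear, right_ear = (np.asarray(p, dtype=float) for p in (nose, left_ear, right_ear))
    # Origin: midpoint between the two ear sensors.
    origin = (left_ear + right_ear) / 2.0
    # Lateral axis: from the right ear towards the left ear.
    lateral = left_ear - right_ear
    lateral = lateral / np.linalg.norm(lateral)
    # Anterior axis: towards the nose sensor, made orthogonal to the lateral axis (Gram-Schmidt).
    anterior = nose - origin
    anterior = anterior - np.dot(anterior, lateral) * lateral
    anterior = anterior / np.linalg.norm(anterior)
    # Third axis completes a right-handed frame.
    third = np.cross(anterior, lateral)
    return origin, np.vstack([anterior, lateral, third])


def to_head_coordinates(point, nose, left_ear, right_ear):
    """Express one articulator sensor position relative to the head frame of the same sample."""
    origin, rotation = head_frame(nose, left_ear, right_ear)
    return rotation @ (np.asarray(point, dtype=float) - origin)
```

Applied sample by sample, this gives articulator trajectories relative to the head. If the whole head is rotated and shifted, the result for a tongue sensor stays the same.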

Hardware:

Carstens AG501 (16 channels) and a Carstens AG501twin for simultaneous recordings of two speakers in dialogue (Carstens Medizinelektronik GmbH, http://www.articulograph.de/)

Software:

ema2wav converter: ema2wav is open-source software for converting articulographic data. It is platform-independent and allows movement trajectories (lips, tongue, jaw) to be loaded into and analysed in the phonetics software Praat. It was developed in a collaboration between IfL Phonetics and CNRS/Sorbonne Nouvelle. The software is available at https://github.com/phbuech/ema2wav
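The general idea of storing a movement trajectory in an audio file can be illustrated with Python's standard `wave` module. Once a trajectory is stored this way, Praat can open it like any other sound. This is a hypothetical sketch for illustration only; it is not how ema2wav itself is implemented. The function name, the peak normalisation to 16-bit PCM, and the mono layout are all assumptions.

```python
import sys
import wave
from array import array


def trajectory_to_wav(samples, fs, out):
    """Write one trajectory (e.g. tongue-tip height in mm) as a mono 16-bit WAV file.

    fs: sampling rate of the EMA recording in Hz (an int, e.g. 250; assumed).
    out: a filename or a binary file-like object.
    Returns the peak value used for scaling, so the original units can be restored.
    """
    peak = max((abs(s) for s in samples), default=0.0) or 1.0
    # Scale to [-32767, 32767] so the trajectory fits 16-bit PCM.
    pcm = array("h", (round(s / peak * 32767) for s in samples))
    if sys.byteorder == "big":
        pcm.byteswap()  # WAV sample data is little-endian
    with wave.open(out, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(fs)
        w.writeframes(pcm.tobytes())
    return peak
```

Returning the peak is one way to keep the scaling reversible: multiplying each stored value by `peak / 32767` recovers the trajectory in its original units, up to 16-bit quantisation.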

MATLAB, R, EMA2SSFF converter: conversion of EMA data to the SSFF format (e.g. for the free software EMU-webApp and Praat)
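To show what an SSFF file contains, here is a minimal sketch. It writes an ASCII header followed by interleaved binary records, based on our reading of the SSFF layout used by the EMU system. It is not the EMA2SSFF converter. The header fields, the track names (e.g. `tt_z`) and the choice of one little-endian DOUBLE per track are assumptions, so check the output against files written by EMU's own tools before relying on it.

```python
import struct


def to_ssff(columns, record_freq, start_time=0.0):
    """Serialise equally long tracks into an SSFF byte string (layout assumed, see lead-in).

    columns: dict mapping a track name (hypothetical, e.g. "tt_z") to its samples.
    record_freq: sampling rate of the tracks in Hz.
    """
    names = list(columns)
    if not names:
        raise ValueError("at least one track is required")
    lengths = {len(columns[name]) for name in names}
    if len(lengths) != 1:
        raise ValueError("all tracks must have the same number of samples")
    n_records = lengths.pop()
    # ASCII header; "Machine IBM-PC" declares little-endian binary data.
    header = [
        "SSFF -- (c) SHLRC",
        "Machine IBM-PC",
        f"Record_Freq {float(record_freq)}",
        f"Start_Time {float(start_time)}",
    ]
    header += [f"Column {name} DOUBLE 1" for name in names]
    header.append("-----------------")
    # Binary body: records are interleaved, i.e. one value per track for each sample.
    body = bytearray()
    for i in range(n_records):
        for name in names:
            body += struct.pack("<d", columns[name][i])
    return ("\n".join(header) + "\n").encode("ascii") + bytes(body)
```

Storing every track as a separate single-value column keeps the sketch simple. Related dimensions of one sensor (x, y, z) could instead be grouped into one multi-value column.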